Image Texture/Perception-Based Haptic Model Assignment to 3D Mesh and Synthesizing Haptic Texture Model based on Haptic Affective Space (2012.09-current)

Researchers: Waseem Hassan, Arsen Abdulali, and Seokhee Jeon

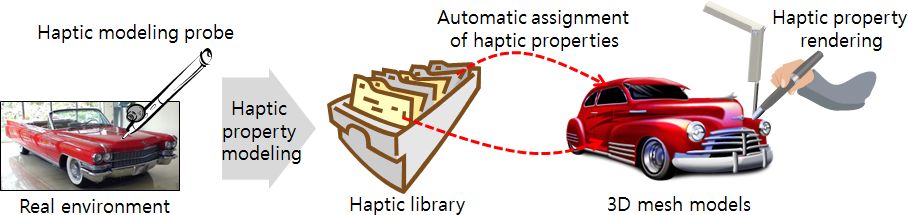

The lack of haptic content is one of the limitations of current haptics technology, so this research aims to develop a hardware and software framework for efficient haptic model building based on actual measurement data. In this work, we develop and integrate data-driven haptic content modeling methods for stiffness, friction, and surface texture, and construct “The Haptic Library.” We then seek new perception- and image-based techniques to automatically assign the acquired models to 3D mesh models.

We then shifted our focus toward haptic texture model synthesis. The main goal is to synthesize new virtual textures by manipulating the affective properties of existing real-life textures. To this end, two spaces are established: a two-dimensional “affective space,” built from a series of psychophysical experiments, where real textures are arranged according to affective properties (hard-soft, rough-smooth), and a two-dimensional “haptic model space,” where real textures are placed based on features from tool-surface contact acceleration patterns (movement-velocity, normal-force). A third space, called the “authoring space,” is formed to merge the two, correlating changes in the affective properties of real-life textures with changes in the actual haptic signals in the haptic model space. The authoring space is constructed such that the features of the haptic model space that are highly correlated with the affective space become its axes. As a result, a new texture signal corresponding to any point in the authoring space can be synthesized in a perceptually correct manner by weighted interpolation of the three nearest real surfaces.
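The interpolation step can be illustrated with a minimal Python sketch. It is not the published method: the inverse-distance weighting, the function names, and the flat feature vectors are all assumptions made for illustration, since the description above only states that the three nearest real surfaces are blended with weights.

```python
import math


def three_nearest_weights(target, surfaces):
    """Return [(name, weight), ...] for the three surfaces nearest to `target`.

    `surfaces` maps a texture name to its 2D coordinates in the authoring space.
    Weights are inverse-distance weights normalized to sum to 1 (an assumption;
    the source only says "weighted interpolation").
    """
    dists = sorted((math.dist(target, pos), name) for name, pos in surfaces.items())[:3]
    # A target that coincides with a real surface reproduces that surface exactly.
    if dists[0][0] == 0.0:
        return [(dists[0][1], 1.0)]
    inv = [(name, 1.0 / d) for d, name in dists]
    total = sum(w for _, w in inv)
    return [(name, w / total) for name, w in inv]


def interpolate_features(weights, features):
    """Blend per-texture feature vectors (hypothetical model parameters)."""
    length = len(next(iter(features.values())))
    return [sum(w * features[name][i] for name, w in weights) for i in range(length)]
```

A point equidistant from three real textures receives equal weights, so the synthesized feature vector is the plain average of the three.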

This research is part of the Global Frontier project funded by NRF Korea.

- Waseem Hassan and Seokhee Jeon, “Evaluating Differences Between Bare-handed and Tool-Based Interaction in Perceptual Space”, 2016 IEEE Haptics Symposium (HAPTICS). IEEE, 2016.

- Waseem Hassan, Arsen Abdulali, and Seokhee Jeon, “Authoring New Haptic Textures Based on Interpolation of Real Textures in Affective Space,” IEEE Transactions on Industrial Electronics 2019, In press.

- Waseem Hassan, Arsen Abdulali, Muhammad Abdullah, Sang Chul Ahn, and Seokhee Jeon, “Towards Universal Haptic Library: Library-Based Haptic Texture Assignment Using Image Texture and Perceptual Space,” IEEE Transactions on Haptics, vol. 11, no. 2, 2018.

Pneumatically Controlled Wearable Tactile Actuator for Multi-Modal Haptic Feedback (2021 – current)

Researchers: Ahsan Raza, Waseem Hassan, and Seokhee Jeon

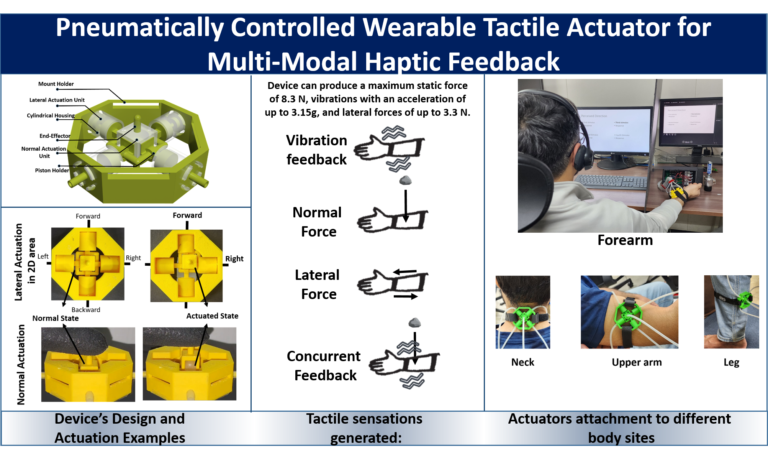

This paper introduces a wearable pneumatic actuator designed to provide multiple types of tactile feedback through a single end-effector. To this end, the actuator combines a 3D-printed framework consisting of five 0.5 DOF soft silicone air cells with a pneumatic system. The actuator is capable of producing diverse haptic feedback, including vibration, pressure, impact, and lateral force, controlled by an array of solenoid valves. The design’s focus on multimodality in a compact and lightweight form factor makes it highly suitable for wearable applications. It can produce a maximum static force of 8.3 N, vibrations with an acceleration of up to 3.15 g, and lateral forces of up to 3.3 N. The efficacy of the actuator is demonstrated through two user studies: one focusing on perception, where users differentiated between lateral cues and vibration frequencies, and another within a first-person shooter gaming scenario, which revealed enhanced user engagement and experience. The actuator’s adaptability to different body sites and its rich multimodal haptic feedback make it applicable to virtual reality, gaming, training simulations, and more.

- Ahsan Raza, Waseem Hassan, and Seokhee Jeon, “Pneumatically Controlled Wearable Tactile Actuator for Multi-Modal Haptic Feedback,” IEEE Access, 2024.

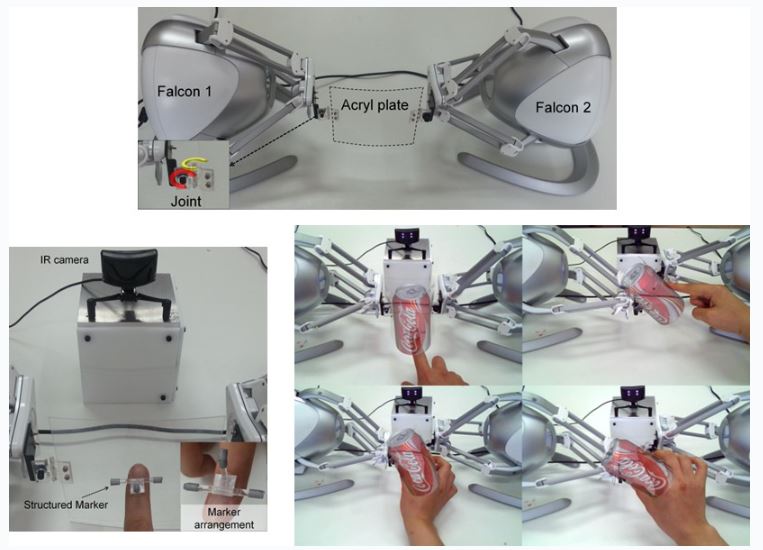

Model-Mediated Teleoperation for Remote Haptic Texture Sharing: Initial Study of Online Texture Modeling and Rendering (2022 – current)

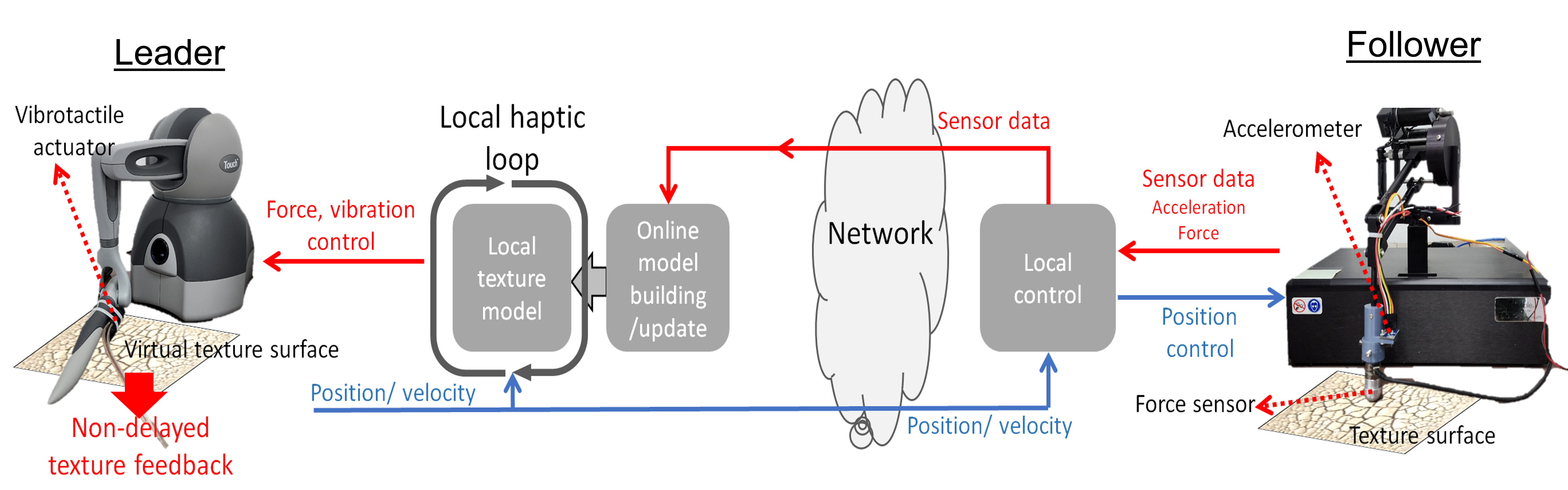

Researchers: Mudassir Ibrahim Awan, Tatyana Ogay, Waseem Hassan, Dongbeom Ko, Sungjoo Kang, and Seokhee Jeon

This work presents the first model-mediated teleoperation (MMT) framework capable of sharing surface haptic texture. The follower side collects physical signals that contribute to haptic texture perception, e.g., high-frequency acceleration, and streams them to the leader side. The leader side uses the signals to build and update a local measurement-based texture simulation model that reflects the remote surface. At the same time, the leader runs a local simulation using the model, resulting in non-delayed, stable, and accurate texture feedback. Because haptic texture rendering imposes stricter real-time requirements, e.g., a higher update rate and lower action-feedback latency, MMT is a well-suited platform for remote texture sharing. An initial proof-of-concept system supporting single, homogeneous surfaces is implemented and evaluated, demonstrating the potential of the approach.
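The data flow described above can be sketched as a toy Python class. This is only an illustration of the model-mediated idea, not the framework's actual texture model: the class name, the speed-binned averaging, and the units are all assumptions. The point it shows is that streamed follower samples only update the local model, while rendering queries the local model and never waits on the network.

```python
from collections import defaultdict


class LocalTextureModel:
    """Leader-side stand-in for a measurement-based texture model (hypothetical)."""

    def __init__(self, bin_width=10.0):
        self.bin_width = bin_width  # scan-speed bin width in mm/s (assumed unit)
        self._sum = defaultdict(float)
        self._count = defaultdict(int)

    def _bin(self, speed):
        return int(speed // self.bin_width)

    def update(self, speed, accel_rms):
        """Fold one streamed follower sample into the model."""
        b = self._bin(speed)
        self._sum[b] += accel_rms
        self._count[b] += 1

    def render(self, speed):
        """Return the vibration amplitude to display from local data only."""
        b = self._bin(speed)
        if self._count[b] == 0:
            return 0.0  # no data yet for this speed range
        return self._sum[b] / self._count[b]
```

In such a design, `update` would be called from the network receive path whenever a delayed sample arrives, and `render` from the high-rate haptic loop.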

- Mudassir Ibrahim Awan, T. Ogay, W. Hassan, D. Ko, S. Kang and Seokhee Jeon, “Model-Mediated Teleoperation for Remote Haptic Texture Sharing: Initial Study of Online Texture Modeling and Rendering,” 2023 IEEE International Conference on Robotics and Automation (ICRA), 2023, pp. 12457-12463, doi: 10.1109/ICRA48891.2023.10160503.

Vibrotactile push latch effects on the automotive handleless doors

Researchers: Arsen Abdulali and Seokhee Jeon

Wearable Soft Pneumatic Ring with Multi-Mode Controlling for Rich Haptic Effects

Researchers: Aishwari Talhan, Hwangil Kim, and Seokhee Jeon

In this work, we present a lightweight, multi-mode, finger-worn haptic device based on pneumatic actuation of a soft bladder. Pneumatic actuation has the potential to address the issues of conventional wearable haptic devices, which are often bulky and limited to a single kind of feedback, e.g., vibrotactile only or pressure only. The prototype we developed is a ring-shaped bladder made of soft silicone, connected to valves and a small compressed-air source. The ring actuator consists of two layers: an inner (active) layer of stretchable silicone and an outer (passive) layer of non-stretchable silicone. When pressurized air enters the cavity, the stretchable layer inflates inward and pushes against the user’s skin, while the non-stretchable layer remains unchanged, so the size and shape of the actuator are maintained. We also present variable rendering modes: the device provides not only static pressure to the skin but also high-frequency vibrotactile feedback up to 250 Hz through fast valve control. We experimentally characterized the relation between valve opening and the frequency and amplitude of the feedback to ensure accurate rendering. To our knowledge, vibrotactile feedback generated by a soft pneumatic actuator has not been presented elsewhere. In the demonstration, we present a scenario in which this wearable soft actuator renders various haptic effects in combination with a VR/AR application.

Generalizing Pneumatic based Augmented Haptics Palpation Training Simulator

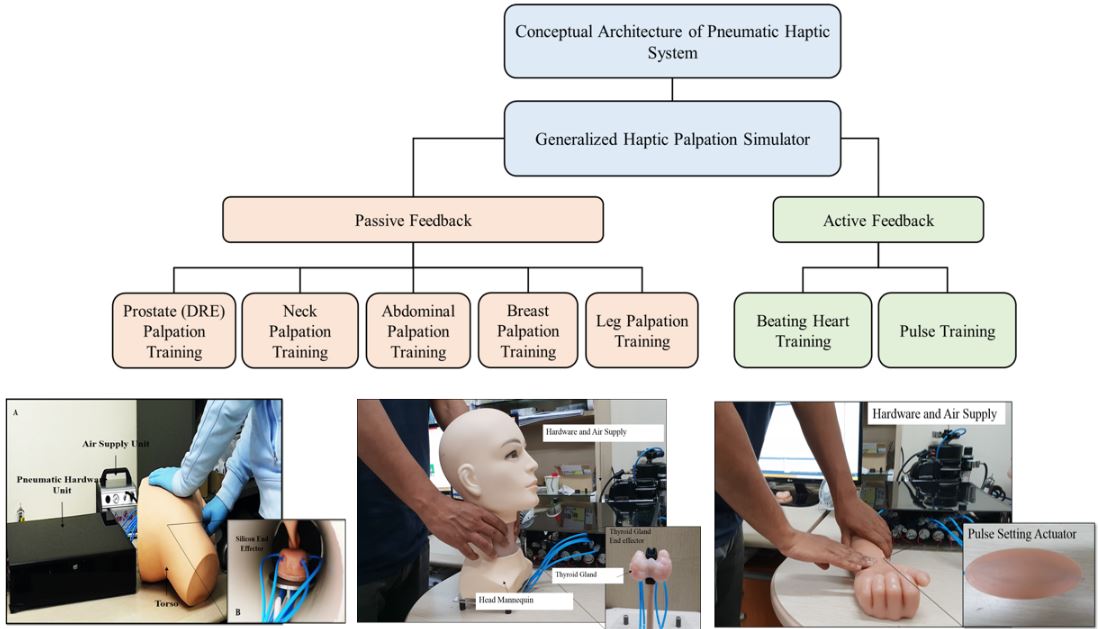

Researchers: Aishwari Talhan and Seokhee Jeon

Augmented haptics enables us to experience an enhanced reality with high fidelity by combining real and virtual environments. Applications of this technology include medicine, telemedicine, entertainment, telerobotics, and education. Palpation is the most basic preliminary procedure a physician uses to perceive, diagnose, and treat physical problems. We have analyzed two categories of palpation procedure: passive and active. In passive palpation, the physician palpates localized abnormalities that have already formed at a location, for example tumors and lumpy tissue conditions. In active (dynamic) palpation, the physician perceives phenomena that occur in real time, such as pulse and heartbeat.

In previous work, we demonstrated an augmented haptic prostate palpation (digital rectal examination, DRE) simulator using pneumatic actuation. In this paper, we extend that work by generalizing the approach to palpation training simulators using augmented haptics and pneumatics. The complete system consists of original electro-pneumatic hardware, various silicone actuators, body mannequins, and a supporting library of abnormalities of multiple body parts and organs. In a video demonstration, we show active and passive feedback scenarios for three types of palpation training simulators: 1) prostate palpation, 2) thyroid gland (neck) palpation, and 3) pulse palpation (see Fig. 1).

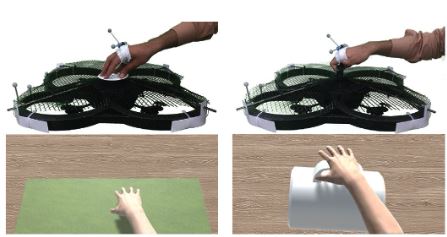

HapticDrone: An Encountered-Type Kinesthetic Haptic Interface with Controllable Force Feedback

Researchers: Muhammad Abdullah, Minji Kim, and Seokhee Jeon

HapticDrone is our new approach to transforming a drone into a force-reflecting haptic interface. While our earlier work proved the concept, this paper concretizes it by implementing accurate force control along with effective encountered-type stiffness and weight rendering for 1D interaction as a first step. To this end, the generated force is identified with respect to the drone’s thrust command, allowing precise force control. Force control is combined with tracking of the drone and the hand, turning the HapticDrone into an ideal end-effector for encountered-type haptics and making the system ready for more sophisticated physics simulation.

The stiffness and weight of a virtual object are our first simulation targets. Conventional stiffness and gravity simulation are merged into the encountered-type position control scheme, allowing a user to feel the softness and weight of an object. We further confirmed feasibility by showing that the system fulfills the physical performance requirements commonly needed for an encountered-type haptic interface, i.e., force rendering bandwidth, accuracy, and tracking performance.
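As a rough illustration of 1D stiffness and weight rendering, the Python sketch below computes target force magnitudes with a Hooke's-law spring and a gravity term, then inverts a force-thrust calibration. The linear calibration `force = gain * command + offset` and all names and parameter values are assumptions; the source only says that the generated force is identified with respect to the thrust command.

```python
G = 9.81  # gravitational acceleration in m/s^2


def stiffness_force(penetration_m, stiffness_n_per_m):
    """Spring force magnitude (N) for a hand pressing into a virtual object."""
    # No force is rendered before contact (negative penetration).
    return stiffness_n_per_m * max(penetration_m, 0.0)


def weight_force(mass_kg):
    """Force magnitude (N) felt when holding a virtual object of the given mass."""
    return mass_kg * G


def thrust_command(force_n, gain, offset):
    """Invert an assumed linear calibration: force = gain * command + offset."""
    return (force_n - offset) / gain
```

For example, with a hypothetical calibration of `gain=0.01` and `offset=-1.0`, a 4 N target force maps to a thrust command of about 500 in the controller's own units.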

Haptic-Enabled Digital Rectal Examination Simulator Using Pneumatic Bladder

Researchers: Aishwari Talhan and Seokhee Jeon

The currently available prostate palpation simulators are based on either a physical mock-up or pure virtual simulation. Both cases have their inherent limitations. The former lacks flexibility in presenting abnormalities and scenarios because of the static nature of the mock-up and has usability issues because the prostate model must be replaced in different scenarios. The latter has realism issues, particularly in haptic feedback, because of the very limited performance of haptic hardware and inaccurate haptic simulation. This paper presents a highly flexible and programmable simulator with high haptic fidelity. Our new approach is based on a pneumatic-driven, property-changing, silicone prostate mock-up that can be embedded in a human torso mannequin. The mockup has seven pneumatically controlled, multi-layered bladder cells to mimic the stiffness, size, and location changes of nodules in the prostate. The size is controlled by inflating the bladder with positive pressure in the chamber, and a hard nodule can be generated using the particle jamming technique; the fine sand in the bladder becomes stiff when it is vacuumed. The programmable valves and system identification process enable us to precisely control the size and stiffness, which results in a simulator that can realistically generate many different diseases without replacing anything. The three most common abnormalities in a prostate are selected for demonstration, and multiple progressive stages of each abnormality are carefully designed based on medical data. A human perception experiment is performed by actual medical professionals and confirms that our simulator exhibits higher realism and usability than do the conventional simulators.

Data-Driven Modeling of Haptic Properties

Researchers: Arsen Abdulali, Sunghoon Yim, Seokhee Jeon

Pure data-driven haptic modeling and rendering is one of the emerging techniques in the field of haptics. This approach records necessary signals generated during manual or automated palpation of a target object, e.g., high frequency vibrations through active stroking of a target surface for haptic texture, and uses them in rendering for approximating the responses of the target surface under given user’s interaction. It can effectively handle diverse and complex behaviors with less computational load without knowledge about the object and system. Consequently, it is one of the most relevant approaches for applications requiring high haptic realism.

In our research, we targeted three haptic properties for data-driven modeling: stiffness, friction, and haptic texture. The following are our research outcomes for the three properties.

Data-driven modeling/rendering of stiffness and friction

- Sunghoon Yim, Seokhee Jeon, and Seungmoon Choi, “Data-Driven Haptic Modeling and Rendering of Viscoelastic and Frictional Responses of Deformable Objects,” IEEE Transactions on Haptics, Accepted.

- Sunghoon Yim, Seokhee Jeon, and Seungmoon Choi, “Data-Driven Haptic Modeling and Rendering of Deformable Objects Including Sliding Friction,” In Proceedings of the IEEE World Haptics Conference (WHC), pp. 305-312, 2015.

Data-driven modeling/rendering of haptic texture

- Arsen Abdulali and Seokhee Jeon, “Data-Driven Modeling of Anisotropic Haptic Textures: Data Segmentation and Interpolation,” In Proceedings of EuroHaptics, pp. 228-239, 2016.

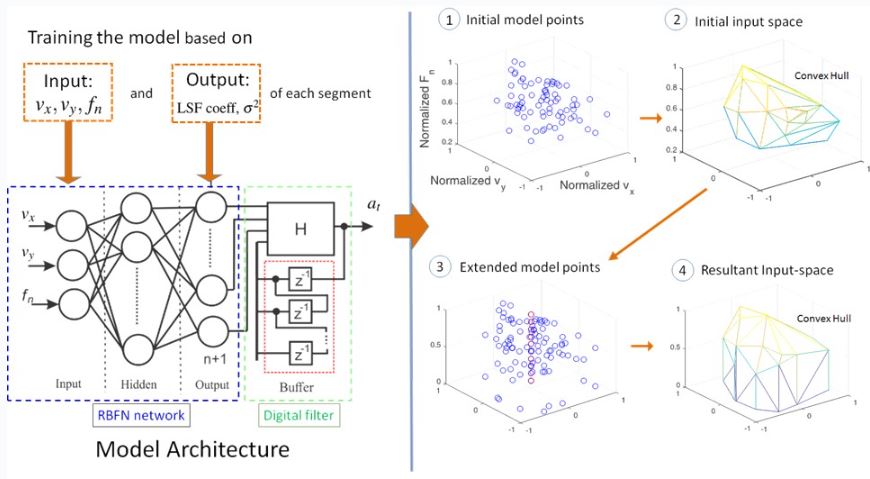

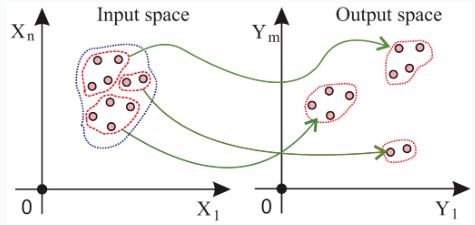

Data-driven modeling/rendering of object dynamics

Data-driven haptic modeling is an emerging technique where contact dynamics are simulated and interpolated based on a generic input-output matching model identified from data sensed during interaction with target physical objects. In this research, we present a new sample selection algorithm in which the variance of the output is observed when selecting representative input-output samples, in order to ensure the quality of output prediction. The main idea is that representative input-output pairs are chosen so that the ratio of the standard deviation to the mean of the corresponding output group does not exceed an application-dependent threshold.

- Arsen Abdulali, Waseem Hassan, and Seokhee Jeon, “Stimuli-Magnitude-Adaptive Sample Selection for Data-Driven Haptic Modeling,” MDPI Entropy, vol. 18, no. 222, 2016.
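The selection criterion described above (standard deviation divided by mean, kept under a threshold) can be sketched in Python as follows. This is a simplified greedy 1D variant written for illustration, not the published algorithm: the grouping of sorted neighbors, the use of group means as representatives, and the function name are assumptions.

```python
from statistics import mean, pstdev


def select_samples(samples, cv_threshold):
    """Greedy 1D sketch: group neighbors while the output CV stays under the threshold.

    `samples` is a list of (input, output) pairs with positive outputs.
    Returns one representative (mean input, mean output) per group.
    """
    groups, current = [], []
    for pair in sorted(samples):
        candidate = current + [pair]
        outputs = [y for _, y in candidate]
        # Coefficient of variation: standard deviation relative to the mean.
        cv = pstdev(outputs) / mean(outputs)
        if current and cv > cv_threshold:
            groups.append(current)  # close the group before it gets too spread out
            current = [pair]
        else:
            current = candidate
    if current:
        groups.append(current)
    return [(mean(x for x, _ in g), mean(y for _, y in g)) for g in groups]
```

Because the criterion is relative to the mean, regions with large output magnitudes tolerate larger absolute spread, so fewer representatives are kept there than a fixed absolute tolerance would require.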

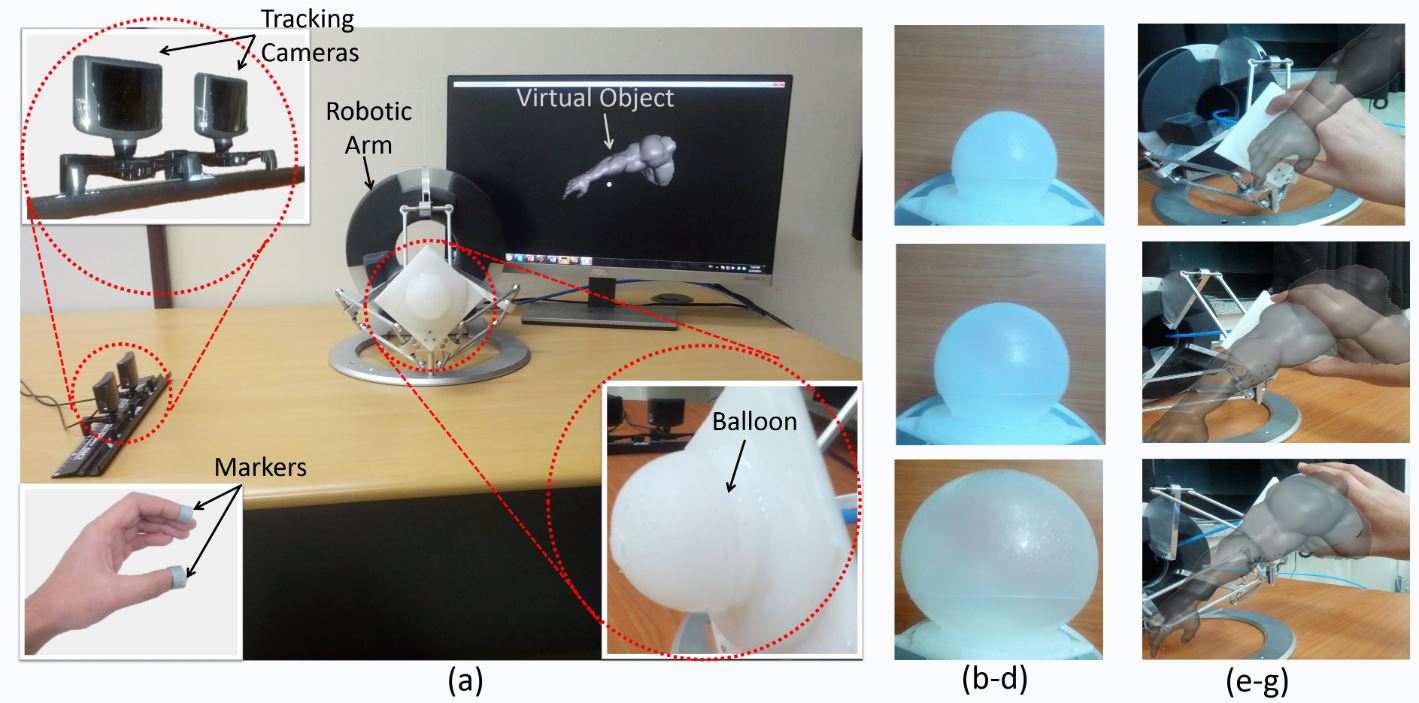

Property-Changing Haptic Handle for Encountered-Type Haptic Device (2012.09-current)

Researchers: Noman Akbar, Hongchae Lee, and Seokhee Jeon

Previous approaches to encountered-type haptic interfaces suffer from limited generality. Customized and pre-shaped end effectors can only represent a specific object, limiting the flexibility of the system. Manually or automatically replacing the end effector is one option, but it is not feasible when surface properties must be changed frequently. An ideal solution is to introduce a “universal end effector” whose haptic properties, such as shape and stiffness, can be systematically altered to cope with virtual objects that have different haptic properties.

Research outcomes

- Noman Akbar and Seokhee Jeon, “Encountered-Type Haptic Interface for Grasping Interaction with Round Variable Sized Objects via Pneumatic Balloon,” in Proceedings of EuroHaptics, 2014 (to be presented).

- Hongchae Lee, Noman Akbar, and Seokhee Jeon, “Haptic Rendering of Curved Surface by Bending an Encountered-Type Flexible Plate,” in Proceedings of Korea Computer Congress 2014.

- Seokhee Jeon, “Haptic Rendering of Curved Surface by Bending an Encountered-Type Flexible Plate,” IEICE Information and Systems, Accepted.

Haptic Augmented Reality (2009-current)

Researchers: Sunghoon Yim, Matthias Harders, Seungmoon Choi, and Seokhee Jeon

As augmented reality (AR) enables a real space to be transformed to a semi-virtual space by providing a user with the mixed sensations of real and virtual objects, haptic AR does the same for the sense of touch; a user can touch a real object, a virtual object, or a real object augmented with virtual touch. Visual AR has relatively mature technology and is being applied to diverse practical applications such as surgical training, industrial manufacturing, and entertainment. In contrast, the technology for haptic AR is quite young and poses a great number of new research problems ranging from modeling to rendering in terms of both hardware and software. We have been examining the potential of this haptic AR technology. Our long-term research aims at developing a systematic methodology for modulating the haptic properties of a real object with the aid of a haptic interface, i.e., a “haptic AR toolkit.” Please see below for our major research outcomes.

Research outcomes

- Sunghoon Yim, Seokhee Jeon, and Seungmoon Choi, “Normal and Tangential Force Decomposition and Augmentation Based on Contact Centroid,” AsiaHaptics, 2014 (Honorable mention – Final candidate for the best demo award).

- Seokhee Jeon, Seungmoon Choi, and Matthias Harders, “Haptic Augmentation in Soft Tissue Interaction,” in Multisensory Softness, edited by M. Di Luca, Springer (in print)

This book chapter summarizes our major research results with more focus on the stiffness modulation.

- Seokhee Jeon and Matthias Harders, “Haptic Tumor Augmentation: Exploring Multi-Point Interaction,” IEEE Transactions on Haptics, Accepted, 2014.

This is our second attempt to apply the stiffness modulation algorithms to a breast tumor palpation scenario. This time, we focused on palpation using two contact points. Special attention was paid to the realistic recreation of the mutual effect between the points.

- Seokhee Jeon, “Haptically Assisting Breast Tumor Detection by Augmenting Abnormal Lump,” IEICE Transactions on Information & Systems, vol. E97-D, no. 2, pp. 361-365, 2014.

This paper reports the use of haptic augmented reality in breast tumor palpation. In general, lumps in the breast are stiffer than surrounding tissues, allowing us to haptically detect them through self-palpation. The goal of the study is to assist self-palpation of lumps by haptically augmenting stiffness around lumps. The key steps are to estimate non-linear stiffness of normal tissues in the offline preprocessing step, detect areas that show abnormally stiffer responses, and amplify the difference in stiffness through a haptic augmented reality interface. The performance of the system was evaluated in a user-study, demonstrating the potential of the system.
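The three key steps of the breast tumor study (estimate the normal response offline, detect abnormally stiff responses, amplify the difference) can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the paper's algorithm: the function name, the `gain` and `threshold` parameters, and the polynomial normal-tissue response used in the example are hypothetical.

```python
def augmentation_force(displacement, measured_force, normal_response,
                       gain=1.0, threshold=0.1):
    """Extra virtual force (N) that exaggerates a stiffer-than-normal response.

    `normal_response` is a callable giving the force expected from normal tissue
    at a given displacement (assumed to come from offline estimation).
    """
    expected = normal_response(displacement)
    excess = measured_force - expected
    # Treat small deviations as normal tissue and leave the real feel unchanged.
    if excess <= threshold:
        return 0.0
    return gain * excess


def example_normal_tissue(displacement):
    """Hypothetical non-linear response of normal tissue, for illustration only."""
    return 100.0 * displacement + 2000.0 * displacement ** 2
```

With this example response, a 10 mm indentation is expected to produce 1.2 N; a measured 2.0 N with `gain=2.0` would add about 1.6 N of virtual force on top of the real contact force.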

- Seokhee Jeon and Matthias Harders, “Extending Haptic Augmented Reality: Modulating Stiffness during Two-Point Squeezing,” In Proceedings of the IEEE Haptics Symposium (HS), pp. 141-146, 2012 (Oral presentation; acceptance rate = 26%).

In this paper we generalize the approach by enabling a user to grasp, lift, and manipulate an object via two interaction points. Modulated stiffness can be explored by squeezing an object. To this end, two haptic interfaces equipped with force sensors are employed to render the additional virtual forces of the augmentation at the two interaction points. We introduce the required extended algorithms and evaluate the performance in a pilot user study.

- Seokhee Jeon, Jean-Claude Metzger, Seungmoon Choi, and Matthias Harders, “Extensions to Haptic Augmented Reality: Modulating Friction and Weight,” In Proceedings of the World Haptics Conference (WHC), pp. 227-232, 2011 (Oral presentation; acceptance rate = 16.6%).

In this paper, we extend our framework to cover further haptic properties: friction and weight. Simple but effective algorithms for estimating and altering these properties have been developed. The first approach allows us to change the inherent friction between a tool tip and a surface to a desired one identified in an offline process. The second technique enables a user to perceive an altered weight when lifting an object at two interaction points.

- Seokhee Jeon and Seungmoon Choi, “Real Stiffness Augmentation for Haptic Augmented Reality,” Presence: Teleoperators and Virtual Environments, vol. 20, no. 4, pp. 337-370, 2011.

This paper is the extended version of our previous Haptics Symposium paper. In Haptics Symposium 2010, we presented earlier results that included basic rendering algorithms and physical performance evaluation for the ball bearing tool. The present paper is significantly extended in various aspects, especially in the inclusion of a more general solid tool with associated algorithms and physical performance evaluation, the perceptual performance assessment of the whole haptic AR system, and in-depth discussions about further research issues.

- Seokhee Jeon and Seungmoon Choi, “Stiffness Modulation for Haptic Augmented Reality: Extension to 3D Interaction,” in Proceedings of the IEEE Haptics Symposium (HS), pp. 273-280. 2010 (Recipient of Best Demo Award).

Our previous work assumed a 1D tapping interaction for stiffness perception as an initial study. In this paper, we extend the system so that a user can interact with a real object in any 3D exploratory pattern while perceiving its augmented stiffness. A series of algorithms are developed for contact detection, deformation estimation, force rendering, and force control. Their performance is thoroughly evaluated with real samples. A particular focus has been on minimizing the amount of preprocessing such as geometry modeling.

- Seokhee Jeon and Seungmoon Choi, “Haptic Augmented Reality: Taxonomy and an Example of Stiffness Modulation,” Presence: Teleoperators and Virtual Environments, vol. 18, no. 5, pp. 387-408, 2009.

This paper is an extended version of our previous EuroHaptics paper in the following aspects: (1) An extended taxonomy encompassing both visual and haptic AR with a thorough survey of previous studies and associated classification, (2) Evaluation of the force control algorithm using another haptic interface, (3) Perceptual assessment of our haptic AR system, and (4) Presentation of many open research issues in haptic AR.

- Seokhee Jeon and Seungmoon Choi, “Modulating Real Object Stiffness for Haptic Augmented Reality,” Lecture Notes in Computer Science (EuroHaptics 2008), vol. 5024, pp. 609-608, 2008 (Acceptance rate = 36%).

As an initial study, this paper investigates the feasibility of haptically modulating the feel of a real object with the aid of virtual force feedback, with the stiffness as a goal haptic property. All required algorithms for contact detection, stiffness modulation, and force control are developed, and their physical performances are evaluated.

- US patent pending, “Apparatus and Method for Providing Haptic Augmented Reality,” US 12/394,032, 2009.02.26.

Previous Research

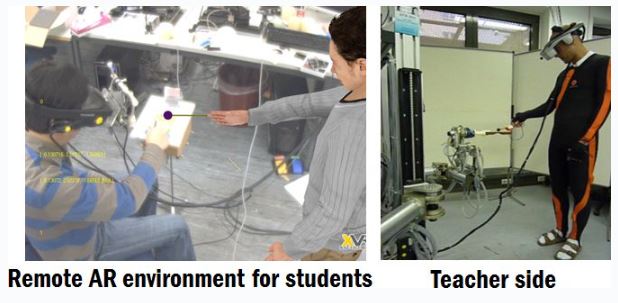

BEAMING Project (2010-2012)

The overall goal of this project is to build a framework that makes a digital copy of real objects and “beams” it to a remote site, allowing a collaborator to interact with it. As a participating researcher, I focused on constructing and beaming the haptic sensation. This project was part of an EU project.

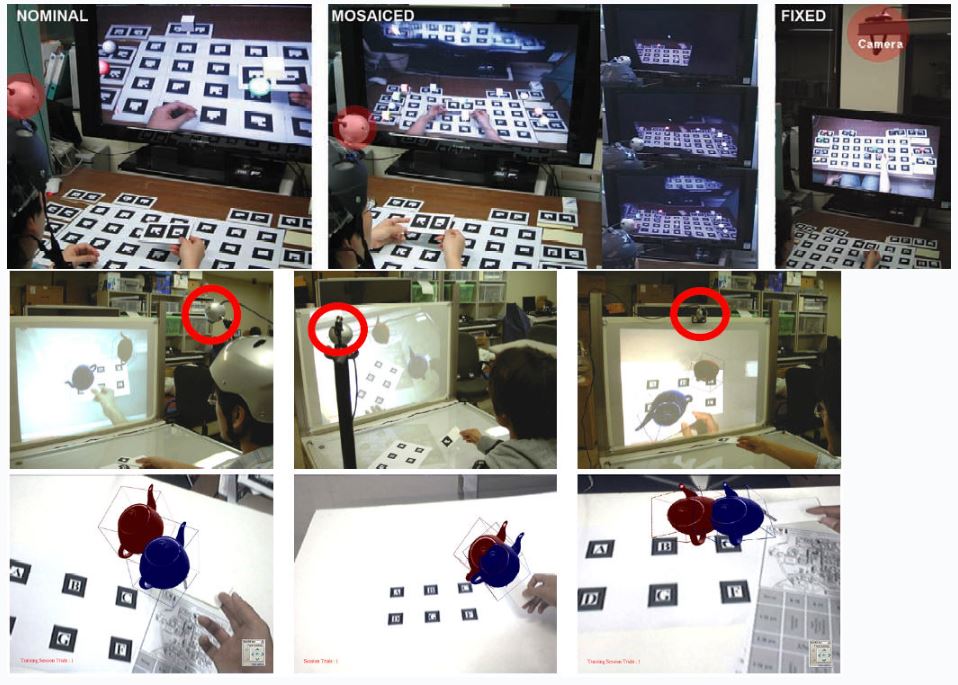

Inconsistencies in Augmented Reality Systems (2006-2008)

One of our current research thrusts is the evaluation of various aspects of VR/AR/MR interfaces. For instance, there are many cases where the interfaces and the corresponding interaction objects do not match in certain aspects such as interaction timing, view/interaction direction, multimodal feedback, etc. This may be due to lack of technology, inappropriate metaphor, missing modality, and so on. In addition to evaluations, we seek computational solutions to improve these situations. For instance, in the picture, we assess usability with regard to various placements of cameras (which capture the real environment in a desktop AR setting), and also look for ways to apply image warping or mosaicing techniques to allow the placement of cameras at user-convenient locations.

Research outcomes

- Seokhee Jeon, Hyeongseop Shim, and Gerard J. Kim, “Viewpoint Usability for Desktop Augmented Reality,” International Journal of Virtual Reality, vol. 5, no. 3, pp. 33-39, 2006.

- Seokhee Jeon and Gerard J. Kim, “Mosaicing a Wide Geometric Field of View for Effective Interaction in Augmented Reality,” Proc. of IEEE ISMAR, 2007.

- Seokhee Jeon and Gerard J. Kim, “Providing a Wide Field of View for Effective Interaction in Desktop Tangible Augmented Reality,” Proc. of IEEE VR, 2008.

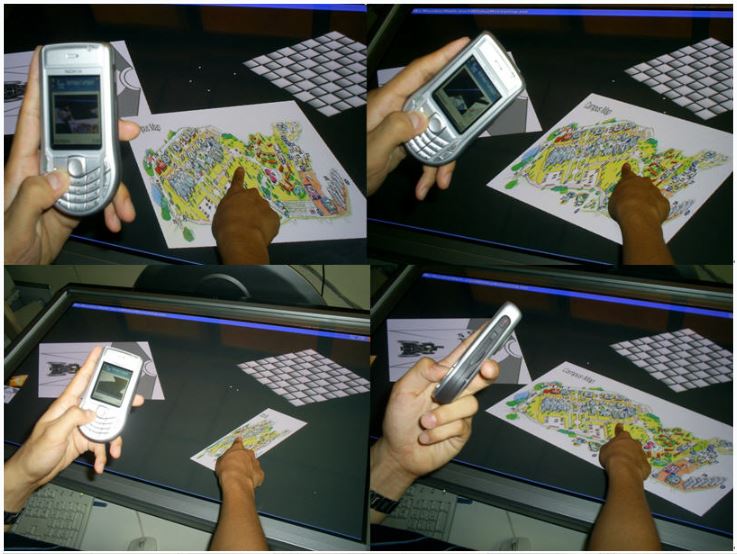

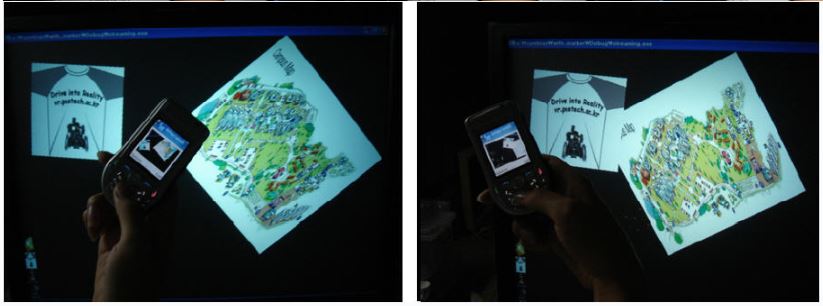

Interactions with Large Ubiquitous Displays Using Camera Equipped Mobile Phones (2005-2010)

In the ubiquitous computing environment, people will interact with everyday objects (or computers embedded in them) in ways different from the usual and familiar desktop user interface. One such typical situation is interacting with applications through large displays such as televisions, mirror displays, and public kiosks. With these applications, the usual keyboard and mouse input is often not viable for practical reasons. In this setting, the mobile phone has emerged as an excellent device for novel interaction. This research topic introduces user interaction techniques for large shared displays using a camera-equipped hand-held device such as a mobile phone or a PDA. In particular, we consider two specific but typical situations: (1) sharing the display from a distance and (2) interacting with a touch screen display at a close distance. Using two basic computer vision techniques, motion flow and marker recognition, we show how a camera-equipped hand-held device can effectively be used to replace a mouse and to share, select, and manipulate 2D and 3D objects, and navigate within the environment presented through the large display.

Research outcomes

- Seokhee Jeon, Jane Hwang, Gerard J. Kim, and Mark Billinghurst, “Interaction with Large Ubiquitous Displays Using Camera-Equipped Mobile Phones,” Personal and Ubiquitous Computing, vol. 12, no. 2, pp. 83-94, 2010.

- Seokhee Jeon, Gerard J. Kim, and Mark Billinghurst, “Interacting with a Tabletop Display Using a Camera Equipped Mobile Phone,” Lecture Notes in Computer Science (HCI International 2007), vol. 4551, pp. 336-343, 2007.

- Seokhee Jeon, Jane Hwang, Gerard J. Kim, and Mark Billinghurst, “Interaction Techniques in Large Display Environments using Hand-held Devices,” In Proceedings of the ACM Symposium on Virtual Reality Software and Technology, pp. 100-103, 2006.

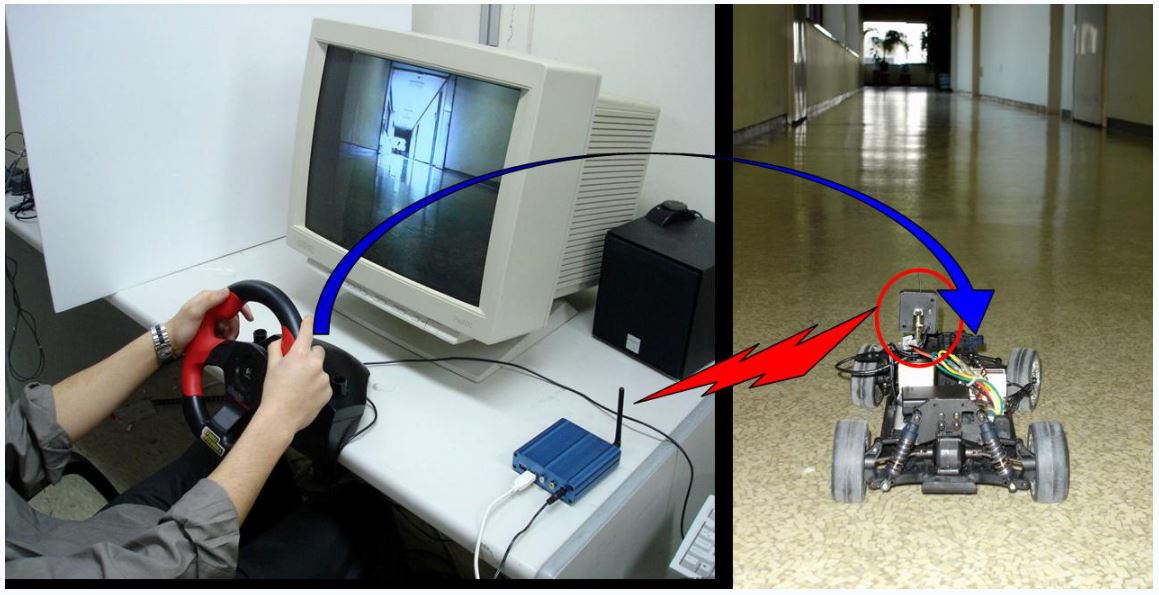

Cart for Speed (2005-2006)

Research outcomes

- Yongjin Kim, Jaehoon Jung, Seokhee Jeon, Sangyoon Lee, and Gerard J. Kim, “Telepresence Racing Game,” SIGCHI ACE 2005.